Author: Editor


SkyReels – AI short drama creation platform launched by Kunlun Wanwei | AI tool set

©️ Copyright Statement: Unless otherwise stated, the copyright of all articles on this site belongs to AI Toolset. Without permission, no individual, media outlet, website, or group may reproduce, plagiarize, or otherwise copy and publish the content of this website, or mirror it on a server that does not belong to this site. Otherwise, this site reserves the right to pursue legal liability in accordance with the law.

Yuanjing – AI video generation tool, supporting multimodal creative storyboard creation services | AI tool set

What is Yuanjing

Yuanjing is an AI video creation tool built on a human-computer symbiosis engine. It supports efficient production of finished videos starting from a creative idea. With automated script generation, consistent character styling, multimodal fusion, and an intelligent workflow, Yuanjing improves creative efficiency and serves industries such as short video, advertising, education, and film and television. Its one-click generation of complete videos aims to make the finished film more professional and personalized, and to support the digital and intelligent upgrading of creative content.

Main functions of Yuanjing

- Creative video script generation: Starting from an idea, quickly generate scripts with support for character customization and creative expansion, covering different video durations (such as 15 seconds, 30 seconds, or 1 minute).

- Multimodal creative storyboard design: Generates storyboard pictures, videos, and music as a complete storyboard design, keeping style and emotion unified to improve the consistency and expressiveness of the content.

- One-click storyboard-to-film engine: Automatically combines multiple storyboard shots into a video, intelligently fills in missing content, and supports subtitle and narration generation for fast production and a streamlined creative process.

How to use Yuanjing

- Access and register: Visit the Yuanjing official website and follow the prompts to register and log in.

- Submit creative requirements: Submit your video requirements on the platform, including:

  - The theme, style, and duration of the video (such as 15 seconds, 30 seconds, or 1 minute).

  - Character settings (such as character appearance and voice style).

  - Creative direction (such as storyline and emotional expression).

- Script generation: The platform quickly generates a creative video script based on your requirements.

- Storyboard design: Yuanjing generates a multimodal storyboard design from the script, including storyboard pictures, videos, and music, and keeps each character's visual and audio style consistent across the storyboard.

- One-click film: The platform automatically combines the storyboard shots into a video and intelligently fills in missing content. It supports subtitle and narration generation and produces the complete video with one click.

- Output and optimization: The generated film can be published directly, or further adjusted as needed, for example by editing subtitles or changing the music.

Yuanjing’s product pricing

- Gold Membership: 179 yuan/month, with 1,300 points per month, enough to generate 4,680 storyboard pictures or 65 five-second storyboard videos. A single video can run up to 60 seconds. Includes a fast generation channel, watermark-free complete videos, early access to new features, high-quality video generation, and enhanced image support.

- Platinum Membership: 349 yuan/month, with 2,600 points per month, enough to generate 9,360 storyboard pictures or 130 five-second storyboard videos. A single video can run up to 60 seconds. Includes an exclusive fast generation channel and all Gold Membership features.

- Diamond Membership: 829 yuan/month, with 6,500 points per month, enough to generate 23,400 storyboard pictures or 325 five-second storyboard videos. A single video can run up to 60 seconds. Includes an exclusive fast generation channel and all Platinum Membership features.
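The listed figures make it easy to compare the tiers on a per-unit basis. The sketch below simply divides the published numbers; the dictionary layout and the function name unit_costs are illustrative choices, and the resulting rates are implied by the listing rather than stated by Yuanjing.

```python
# Figures copied from the membership list above; prices are in yuan per month.
PLANS = {
    "Gold": {"price": 179, "points": 1300, "pictures": 4680, "videos": 65},
    "Platinum": {"price": 349, "points": 2600, "pictures": 9360, "videos": 130},
    "Diamond": {"price": 829, "points": 6500, "pictures": 23400, "videos": 325},
}


def unit_costs(plan):
    """Derive per-unit figures for one plan by dividing the listed totals."""
    return {
        "points_per_video": plan["points"] / plan["videos"],
        "pictures_per_point": plan["pictures"] / plan["points"],
        "yuan_per_video": round(plan["price"] / plan["videos"], 2),
        "yuan_per_picture": round(plan["price"] / plan["pictures"], 4),
    }


for name, plan in PLANS.items():
    print(name, unit_costs(plan))
```

On these numbers every tier works out to 20 points per five-second video and 3.6 pictures per point, so the tiers differ mainly in the effective price per point.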

Application scenarios of Yuanjing

- Short video creation: Individual creators and brand marketers can quickly generate creative scripts and finished films for efficient content production.

- Advertising production: Provides an efficient video creation workflow for the advertising industry, with customized scripts and storyboard design, to quickly generate advertising videos that match the brand tone across multiple advertising scenarios.

- Film and television production: Helps film and television creators quickly realize creative ideas and improve production efficiency.

- Educational content production: Supports video creation in education, such as teaching shorts and popular science videos, to quickly produce content suited to teaching scenarios and improve the efficiency of producing teaching resources.

- Government publicity: Supports government agencies in producing publicity videos, such as policy explainers and public welfare campaigns, by quickly generating on-topic video content that helps spread government information.


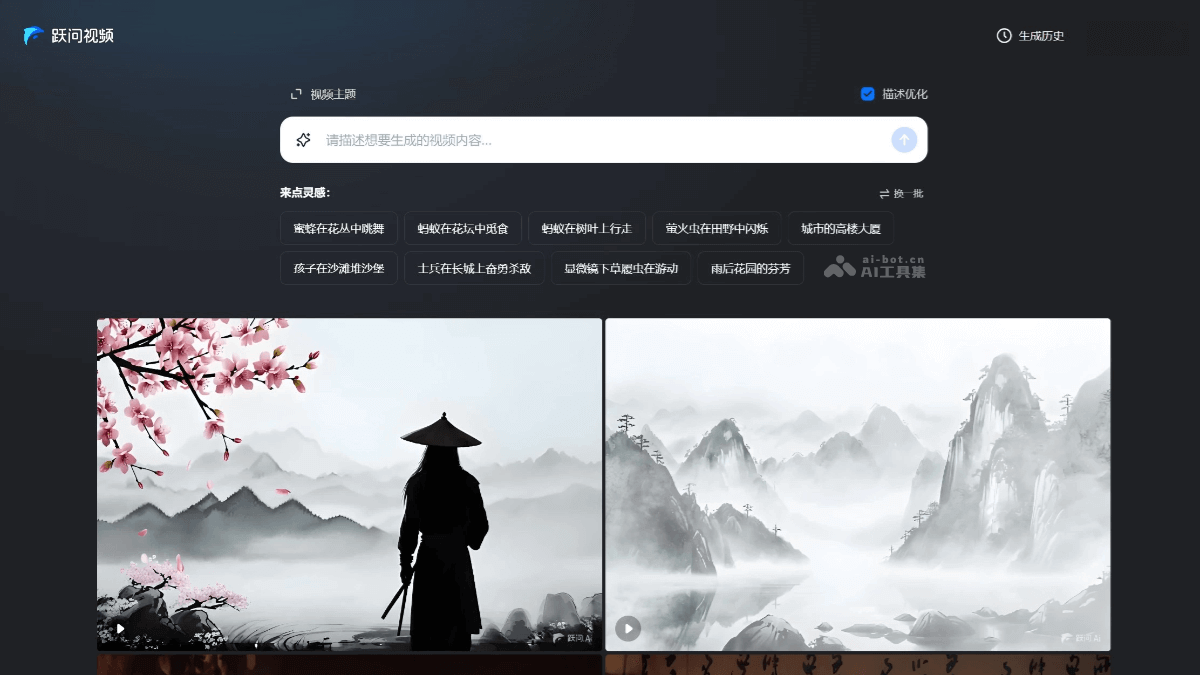

Yuewen Video – AI video generation tool launched by Jieyuexingchen | AI tool set

What is Yuewen Video

Yuewen Video is an AI video generation tool launched by Jieyuexingchen. It supports a variety of video themes, such as cities, science fiction, nature, sports cars, and food. Users can draw inspiration from the example videos Yuewen Video provides; clicking an example automatically fills in the corresponding prompt. Yuewen Video also offers description optimization: after selecting a video theme or entering a topic, you can optimize the video description with one click to improve content quality. It supports generating videos up to 10 seconds long. The closed beta is now open for applications.

Yuewen video generation demonstration

Demo 1: The video shows an ink-wash style scene with rolling mountains and a vast sky in the background. In the foreground, the silhouette of a mysterious figure in a hat stands quietly. On the surrounding branches, pink cherry blossoms drift down in the breeze, adding a poetic sense of motion. The entire video is shot from a static camera, with a clear picture full of the charm of classical Chinese art. (Official prompt)

Demo 2: A little monster jumps out of a mobile phone screen and dances on the table. (Official prompt)

Demo 3: In the video, a magpie forages among the branches in front of a red wall of the Forbidden City. The background is the snow-covered red wall, adding to the quiet beauty of the scene. The magpie moves nimbly among the branches, occasionally pausing to look around. The entire scene is shot with a fixed camera in a quiet, realistic style, carefully capturing a natural winter scene. (Official prompt)

How to use Yuewen Video

- Visit the official website: Go to the Yuewen Video official website, then register and log in to your account.

- Enter a video description: Type the video content you want to generate into the input box. If you need inspiration, click a video theme or refer to the inspiration examples; click "change batch" to explore more.

- Description optimization: You can turn description optimization on or off. When it is on, entering a theme automatically optimizes the prompt so that videos are generated more accurately.

- Closed beta application: The closed beta application form pops up when you submit a prompt. Fill in the requested information and wait for the application to be approved.

Application scenarios of Yuewen Video

- Education and learning: Students and teachers can use Yuewen videos to assist teaching, such as learning biology by watching animal videos, or learning art history through art exhibition videos.

- Creative inspiration: Artists, designers, and content creators can get inspiration from Yuewen videos, such as design ideas from natural landscapes or creative ideas from sports videos.

- Entertainment and leisure: Users can watch Yuewen videos during breaks and relax with interesting content such as animal behavior and natural scenery.

- Marketing and advertising: Companies can use Yuewen Video to optimize their video content, improve search engine rankings, and attract more potential customers.


Tavus – AI video generation platform that supports digital human cloning and real-time conversations | AI toolset

What is Tavus

Tavus is an advanced personalized AI video generation platform that supports creating highly realistic digital human clones and real-time conversational AI video. Built on the Phoenix-2 model and the Conversational Video Interface (CVI), Tavus enables natural, near-human interaction and real-time conversation. The platform provides APIs and developer tools so that enterprises can quickly deploy AI video products in marketing, education, and customer service scenarios. Tavus also aims to ensure that digital clones are used safely and in compliance with regulations.

The main features of Tavus

- AI video generation: Tavus generates videos from scripts using an AI digital clone, so content can be created quickly without recording any footage.

- Real-time conversational video: Tavus's Conversational Video Interface (CVI) lets a digital clone communicate with users in real time, with latency under one second.

- AI model: The Phoenix-2 model is one of Tavus's core technologies. It generates highly realistic digital clones from a user's short video clips. The clone not only looks real but also imitates the user's voice and facial expressions, providing a highly personalized video experience for a range of applications.

- Developer documentation and tools: Tavus provides comprehensive developer documentation and tools to help developers register accounts, obtain API keys, and use the developer portal to try out and integrate digital clones. These resources let developers integrate Tavus's AI video technology into their applications and speed up product development.


The technical principles of Tavus

- Phoenix-2 model: A model developed in-house by Tavus that combines 3D models with 2D generative adversarial networks (GANs) to generate 1-2 minutes of realistic short video.

- Real-time dialogue processing: Tavus's Conversational Video Interface (CVI) lets digital clones communicate in real time with very low latency (under one second). This involves speech recognition, visual processing, and conversational awareness to deliver a rich, natural dialogue experience.

- Natural interaction: Tavus's system is designed for natural interaction, including a conversational large language model (LLM), visual recognition, end-of-turn detection, and interruptibility, so that conversations with digital clones feel real.

- Modular construction: Tavus offers a modular approach that lets developers integrate custom language models or text-to-speech (TTS) systems according to their needs and use cases.

- Easy-to-deploy solution: Tavus provides a pre-built WebRTC solution with which developers can quickly launch and deploy meetings with digital clones.

How to use Tavus

- Register and get an API key: Visit the Tavus official website and register an account to obtain an API key.

- Create a Replica: Create a Replica (a digital twin) through the developer portal, using the built-in camera or uploading existing video footage. Make sure the video follows Tavus's recording guidelines, such as maintaining eye contact, using appropriate gestures, keeping a positive tone, and reading an authorization statement in the video.

- Write code: Using Python and the Tavus API, you can start a conversation quickly. First install requests and python-dotenv, then use the API key to send a POST request to the Tavus API endpoint. Prepare a payload containing replica_id, conversation_name, conversational_context, properties, and other parameters.

- Customize and train the Replica: Train your Replica on your brand style and voice. You can provide custom variables to adjust its tone, style, and behavior so that it reflects your brand image.

- Generate video: Once the Replica is set up, just provide a text script. Tavus's AI takes over and generates personalized videos based on the context of the interaction.

- Clone a real person or choose an existing avatar: You can clone a real person to create a highly realistic Replica, or choose from the avatars Tavus provides.
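The "write code" step above can be sketched roughly as follows. The payload field names come from the step itself; the endpoint URL, the x-api-key header name, the TAVUS_API_KEY variable name, the max_call_duration property, and the replica ID are assumptions made for illustration and should be checked against the current Tavus API reference before use.

```python
import json
import os

# Assumed endpoint; confirm against the current Tavus API reference.
TAVUS_CONVERSATIONS_URL = "https://tavusapi.com/v2/conversations"


def build_payload(replica_id, conversation_name, conversational_context, properties=None):
    """Assemble the request body from the fields named in the steps above."""
    payload = {
        "replica_id": replica_id,
        "conversation_name": conversation_name,
        "conversational_context": conversational_context,
    }
    if properties:
        payload["properties"] = properties
    return payload


def create_conversation(payload, api_key):
    """POST the payload and return the decoded JSON response."""
    import requests  # third-party: pip install requests

    response = requests.post(
        TAVUS_CONVERSATIONS_URL,
        headers={"x-api-key": api_key},  # assumed header name
        json=payload,
        timeout=30,
    )
    response.raise_for_status()
    return response.json()


if __name__ == "__main__":
    try:
        from dotenv import load_dotenv  # third-party: pip install python-dotenv

        load_dotenv()  # reads TAVUS_API_KEY from a local .env file, if present
    except ImportError:
        pass

    payload = build_payload(
        replica_id="r-hypothetical-id",  # placeholder, not a real Replica
        conversation_name="Product demo",
        conversational_context="You are a friendly guide for our product.",
        properties={"max_call_duration": 600},  # assumed property name
    )
    api_key = os.getenv("TAVUS_API_KEY")
    if api_key:
        print(create_conversation(payload, api_key))
    else:
        # No key configured: show the request body instead of sending it.
        print(json.dumps(payload, indent=2))
```

Keeping payload construction separate from the network call makes it possible to inspect or unit-test the request body without an API key.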

Tavus application scenarios

- Customer Service: Enterprises can use Tavus to improve customer service experience, provide instant and personalized services through AI video conversations, and improve customer satisfaction.

- Personalized marketing: Marketers can use Tavus’ technology to create highly personalized videos, customize them according to audience preferences, and enhance marketing effectiveness.

- Virtual assistant: Tavus can act as a virtual assistant in everyday conversations, such as Zoom video conferences, providing real-time communication and information sharing.

- Education and training: In the field of education, Tavus can serve as a digital twin for teachers or trainers, providing personalized learning experiences and training content.

- Product Demonstration and Introduction: Companies can use Tavus to generate product demonstration videos and introduce product features and advantages to potential customers through realistic AI images.


Viggle – AI tool for generating controllable character dynamic videos | AI tool set


Vozo – Multifunctional AI video editing tool | AI tool set


Hedra – AI lip-sync video generation tool that can generate 30-second videos | AI Toolset


Jichuang AI – a one-stop intelligent creation platform launched by Douyin, supporting video, graphics and live broadcast creation | AI Toolset


Miochuang – A simple and easy-to-use AI video creation platform | AI tool set

What is Miochuang

Miochuang (formerly One Frame Miochuang) is an AI content creation platform built on the Miochuang AIGC engine. It provides 2 million creators with services such as text continuation, text-to-speech, text-to-image, text-and-image-to-video, AI video creation, and digital human broadcasting. Its products include Miochuang Digital Human, Miochuang AI Writing Assistant, Miochuang Text-and-Image-to-Video, Miochuang AI Video, Miochuang AI Voice, and Miochuang AI Painting.

What can Miochuang do?

1. Miochuang Digital Human

An intelligent digital human broadcasting platform: enter your copy and generate a "real person" marketing video with one click. The AI digital humans are built on technologies such as digital twins, reproducing a real person's appearance, movements, expressions, and voice at 1:1 to come as close as possible to a digital clone of that person. You can reproduce both a real person's appearance and voice at 1:1 to customize your own digital human, helping you quickly build a corporate or personal IP and support marketing growth.

2. Text and images to video

As a leading intelligent content creation platform in China, Miochuang takes your input copy and uses AI to match visuals automatically based on semantics, going quickly from copy to finished video. It accepts copy and article links as input, and also supports PPT file import for one-click video production. AI handles footage matching, smart dubbing, smart subtitles, and more. Alongside efficient production, it supports flexible fine-grained adjustments, including the script, music, dubbing, rough cut, logo, subtitles, and speech rate. A large online material library can be swapped in freely, turning text and images into a finished video with one click.

3. Miochuang AI Writing Assistant

An intelligent copywriting platform: enter an idea and let the writing flow. Miochuang provides four types of AI writing templates, for short videos, marketing, livestreams, and styled copywriting, covering a wide range of user needs. It can even output livestream scripts directly, improving efficiency for operations staff and livestream hosts. From short video content to marketing copy, the Miochuang AI Writing Assistant can help with the work.

4. Miochuang AI Painting

An intelligent painting generation platform: enter an idea and let your imagination loose. Enter text keywords describing the scene you want the AI to paint, choose modifiers, an artistic style, and an artist style, and generate a polished painting with one click.

5. Miochuang text-to-speech

Miochuang has its own AI dubbing technology: enter text and dubbing is completed automatically with one click. It includes popular voices from across the web, with 56 AI voices available and free switching between Chinese and English. The output sounds like a real person, giving creators a wide range of choices.

The AIGC creation platform Miochuang relies on AI to recognize text semantics and automatically match footage to shots, achieving "automated video editing" and making it easy to produce 100 "high-quality original videos" a day.

6. PPT to video

PPT to video is a new Miochuang feature that lets users upload local PPT and PPTX files and convert static slide content into dynamic video.

- Speaker notes parsed into the script: Users can enter text in the notes field of each slide, which is automatically recognized and converted into the script for the video.

- Matrix video generation: The Miochuang platform arranges the PPT content into a matrix and generates videos from it while retaining all text and pictures from the slides, making the video content more professional and accurate.

7. AI Video

The AI Video function has been fully upgraded with two new features, text-to-video and image-to-video, and supports a variety of video durations and aspect ratios.

- Convert text descriptions or images into video: Provide a text description or image material and convert it into a video.

- Multiple aspect ratio options: The platform offers aspect ratios such as 16:9, 9:16, and 4:3 to suit different scenarios.

- Fast generation with consistency: Videos are generated quickly while keeping the output professional and consistent.
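As a small illustration of what the aspect ratio options mean in pixels, the sketch below converts a ratio string into frame dimensions. The function name frame_size and the default 720-pixel short side are assumptions chosen for the example; the source does not state which resolutions Miochuang actually outputs.

```python
def frame_size(ratio, short_side=720):
    """Return (width, height) in pixels for a ratio such as "16:9".

    The shorter edge is fixed at short_side; both values are rounded to
    even numbers, which common video encoders expect.
    """
    w, h = (int(part) for part in ratio.split(":"))
    scale = short_side / min(w, h)
    width = round(w * scale / 2) * 2
    height = round(h * scale / 2) * 2
    return width, height


for ratio in ("16:9", "9:16", "4:3"):
    print(ratio, frame_size(ratio))
```

With the 720-pixel default, 16:9 gives a landscape frame, 9:16 gives the same frame rotated for vertical platforms, and 4:3 gives a squarer frame.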

Miochuang product pricing

Miochuang can currently be used entirely for free, and members enjoy additional benefits. If you need heavier usage, you can activate a paid plan through the exclusive AI Toolset discount channel at 18.8 yuan (valid within 7 days after registration); the offer ends on 2023.12.31.

Application scenarios of Miochuang

- Self-media content creation: Self-media authors can use the Miochuang platform to quickly convert text articles into attractive video content, improving fan interaction and content dissemination effects.

- Corporate Marketing Promotion: Enterprises can use the Miochuang platform to quickly generate marketing videos such as product introductions, brand promotions, etc. to enhance brand image and market influence.

- Education and training: Educational institutions and individual teachers can use the Miochuang platform to convert teaching materials into vivid video courses, improving the learning experience and teaching results.

- News media: News organizations can use the Miochuang platform to quickly convert text news into video reports that suit how modern audiences consume information.

- Personal creation: Ordinary users can use the simple and easy-to-use video creation tools of the Miochuang platform to achieve rapid visualization of personal creativity.

- Social Media Operations: Social media operators can use the Miochuang platform to quickly generate video content suitable for each platform, improving account activity and influence.


Sora – OpenAI launches AI text-to-video generation model | AI toolset

What is Sora

Sora is an AI video generation model developed by OpenAI. It converts text descriptions into video and can create scenes that are both realistic and imaginative. The model focuses on simulating motion in the physical world and aims to help people solve problems that require real-world interaction. Compared with AI video tools such as Pika, Runway, PixVerse, Morph Studio, and Genmo, which generate only four or five seconds of video, Sora can generate videos up to one minute long while maintaining visual quality and closely following the user's input. In addition to creating videos from scratch, Sora can animate existing still images, or extend and fill in existing videos.

Note that although Sora appears very capable, it has not yet been officially opened to the public; OpenAI is still conducting red team testing, safety checks, and optimization. Currently the OpenAI website offers only introductions, video demos, and technical explanations of Sora, with no directly usable video generation tool or API. The madewithsora.com website collects videos generated by Sora for anyone interested in watching them.

The main functions of Sora

- Text-driven video generation: Sora generates video content that matches a detailed text description provided by the user. Descriptions can cover scenes, characters, actions, emotions, and so on.

- Video quality and fidelity: The generated video maintains high visual quality and closely follows the user's text prompt, so that the content matches the description.

- Simulating the physical world: Sora aims to simulate real-world motion and physical laws, making generated video more visually realistic and able to handle complex scenes and character actions.

- Multi-character and complex scene handling: The model can handle video generation tasks with multiple characters and complex backgrounds, although it may have limitations in some cases.

- Video extension and completion: Sora can not only generate videos from scratch but also animate existing still images or video clips, or extend the length of existing videos.

The technical principles of Sora

OpenAI Sora’s technical architecture conjecture

- Text-conditioned generation: Sora generates videos from text prompts by combining text information with video content. This lets the model understand the user's description and generate matching video clips.

- Visual patches: Sora breaks videos and images down into small visual patches that serve as low-dimensional representations. This lets the model process and understand complex visual information while remaining computationally efficient.

- Video compression network: Before generating video, Sora uses a video compression network to compress raw video data into a low-dimensional latent space. This reduces the complexity of the data and makes it easier for the model to learn and generate video content.

- Spacetime patches: After compression, Sora further decomposes the video representation into a sequence of spacetime patches that serve as the model's input, so that the model can process and understand the spatiotemporal characteristics of video.

- Diffusion model: Sora uses a diffusion model (a DiT model, built on the Transformer architecture) as its core generation mechanism. A diffusion model generates content by gradually removing noise and predicting the original data. In video generation, this means the model gradually recovers clear video frames from a set of noisy patches.

- Transformer architecture: Sora uses the Transformer architecture to process spacetime patches. The Transformer is a powerful neural network model that excels at sequence data such as text and time series. In Sora, it is used to understand and generate sequences of video frames.

- Large-scale training: Sora is trained on large-scale video datasets, which lets the model learn rich visual patterns and dynamics. Large-scale training improves the model's generalization, enabling it to generate diverse, high-quality video content.

- Text-to-video generation: Sora trains a descriptive captioning model and uses it to turn text prompts into detailed video descriptions. These descriptions then guide the generation process so that the generated video matches the text.

- Zero-shot learning: Sora can perform certain tasks zero-shot, such as simulating a particular style of video or game. That is, the model can generate the corresponding video content from a text prompt without task-specific training data.

- Simulating the physical world: During training, Sora showed an ability to simulate aspects of the physical world, such as 3D consistency and object permanence, indicating that the model can understand and simulate real-world physical laws to some extent.
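To make the spacetime patch idea above concrete, here is a toy sketch that cuts a tiny video volume into non-overlapping blocks and flattens each block into one token. Sora's actual implementation is not public; the function name spacetime_patches, the patch sizes, and the use of plain nested lists in place of a compressed latent tensor are all assumptions for illustration.

```python
from itertools import product


def spacetime_patches(video, pt, ph, pw):
    """Split video[t][y][x] into non-overlapping (pt, ph, pw) blocks.

    Each block is flattened into one token (a flat list of values), and the
    tokens are returned in time-major order, ready to feed a sequence model.
    """
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")

    tokens = []
    for t0, y0, x0 in product(range(0, frames, pt),
                              range(0, height, ph),
                              range(0, width, pw)):
        token = [video[t][y][x]
                 for t in range(t0, t0 + pt)
                 for y in range(y0, y0 + ph)
                 for x in range(x0, x0 + pw)]
        tokens.append(token)
    return tokens


# A 4-frame, 4x4 "video" whose values encode their own position.
video = [[[t * 16 + y * 4 + x for x in range(4)] for y in range(4)] for t in range(4)]
tokens = spacetime_patches(video, pt=2, ph=2, pw=2)
print(len(tokens), "tokens of length", len(tokens[0]))
```

Each token here spans two frames and a 2x2 spatial window, which is what lets a Transformer attend across both space and time using a single flat sequence.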

Sora application scenarios

- Social media short film production: Content creators quickly create attractive short videos for sharing on social media platforms. Creators can easily convert their ideas into video without investing a lot of time and resources to learn video editing software. Sora can also generate video content suitable for specific formats and styles based on the characteristics of social media platforms (such as short videos, live broadcasts, etc.).

- Advertising and marketing: Quickly generate advertising videos to help brands convey core information in a short time. Sora can generate animations with strong visual impact, or simulate real scenes to show product features. In addition, Sora can help businesses test different advertising ideas and find the most effective marketing strategies through rapid iteration.

- Prototyping and concept visualization: For designers and engineers, Sora can serve as a powerful tool to visualize their designs and concepts. For example, architects can use Sora to generate three-dimensional animations of architectural projects, allowing customers to understand design intentions more intuitively. Product designers can use Sora to demonstrate how new products work or user experience processes.

- Film and television production: Assist directors and producers in quickly building storyboards during pre-production, or in generating initial visual effects. This helps the team plan scenes and shots before the actual shoot. Sora can also be used to generate special effects previews, allowing production teams to explore different visual effects on a limited budget.

- Education and training: Sora can be used to create educational videos that help students better understand complex concepts. For example, it can generate simulated videos of scientific experiments or recreate historical events, making learning more vivid and intuitive.

How to use Sora

OpenAI Sora currently has no public access. The model is being evaluated by red teamers (security experts) and tested by a small number of visual artists, designers, and filmmakers. OpenAI has not given a specific timetable for wider public availability, though it may come sometime in 2024. To gain access now, individuals must qualify under the expert criteria defined by OpenAI, which cover relevant professional groups involved in assessing the model's usefulness and risk mitigation strategies.